General Info

Undergraduate Capstone

This app was developed as my undergraduate capstone project. At NYU ITP/IMA, this constitutes a structured one-semester project that demonstrates the theoretical and practical techniques a student has learned during their undergraduate career. Each student can decide what they wish to pursue to meet that requirement, and I set my aims quite high: having had a lot of previous experience in both UX design processes and mobile development, I set out to fully ideate, design, build, and user test a public-ready mobile platform for creating and viewing world-scale augmented reality experiences, with a focus on creating historical walking tours. It took plenty of hard work and late nights, but I did complete almost all of the features I set out to implement, and have plans to release the app by the end of the summer.

Thesis Week Presentation

As part of the capstone process, each student gives a ten-minute TED-style presentation during Thesis Week at the end of the semester to publicly share the work that has been done. Here is an embed of my presentation:

Developing the Idea

Inspiration

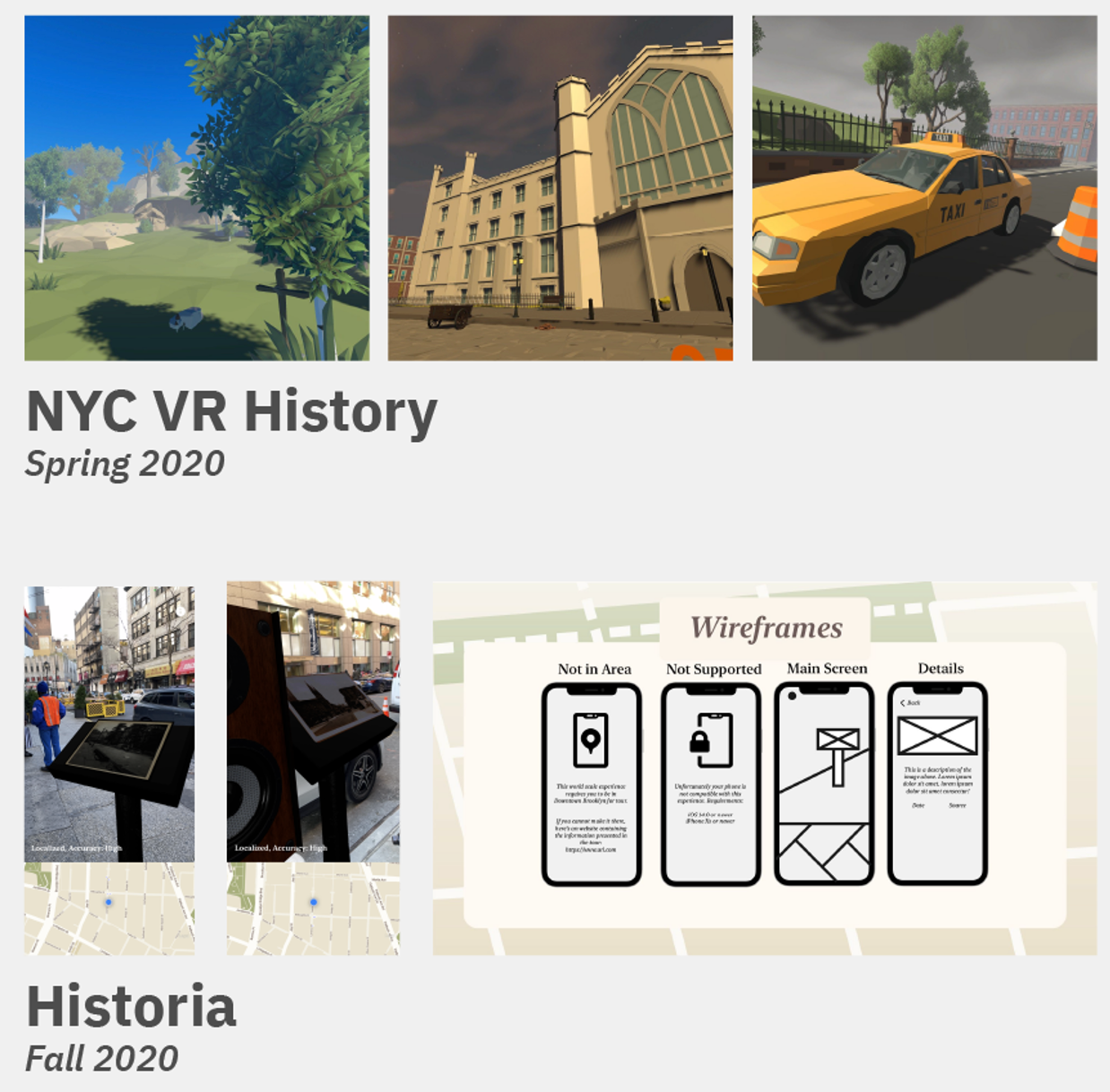

Over the past few years I've developed something of a personal project theme: using experimental technologies to document the ever-changing cityscape of New York City. I worked on a VR-based experience (pictured) that used the immersive nature of virtual reality to create a three-scene historical experience centered on the changing streetscape witnessed by a single street corner by Washington Square Park over the course of four hundred years, as well as an AR-based experimental world-scale historical walking tour prototype. For my undergraduate thesis project, I wanted to take what I'd learned from these past projects and develop an actual, fully released, public platform in the space.

User Research

Competitive Analysis

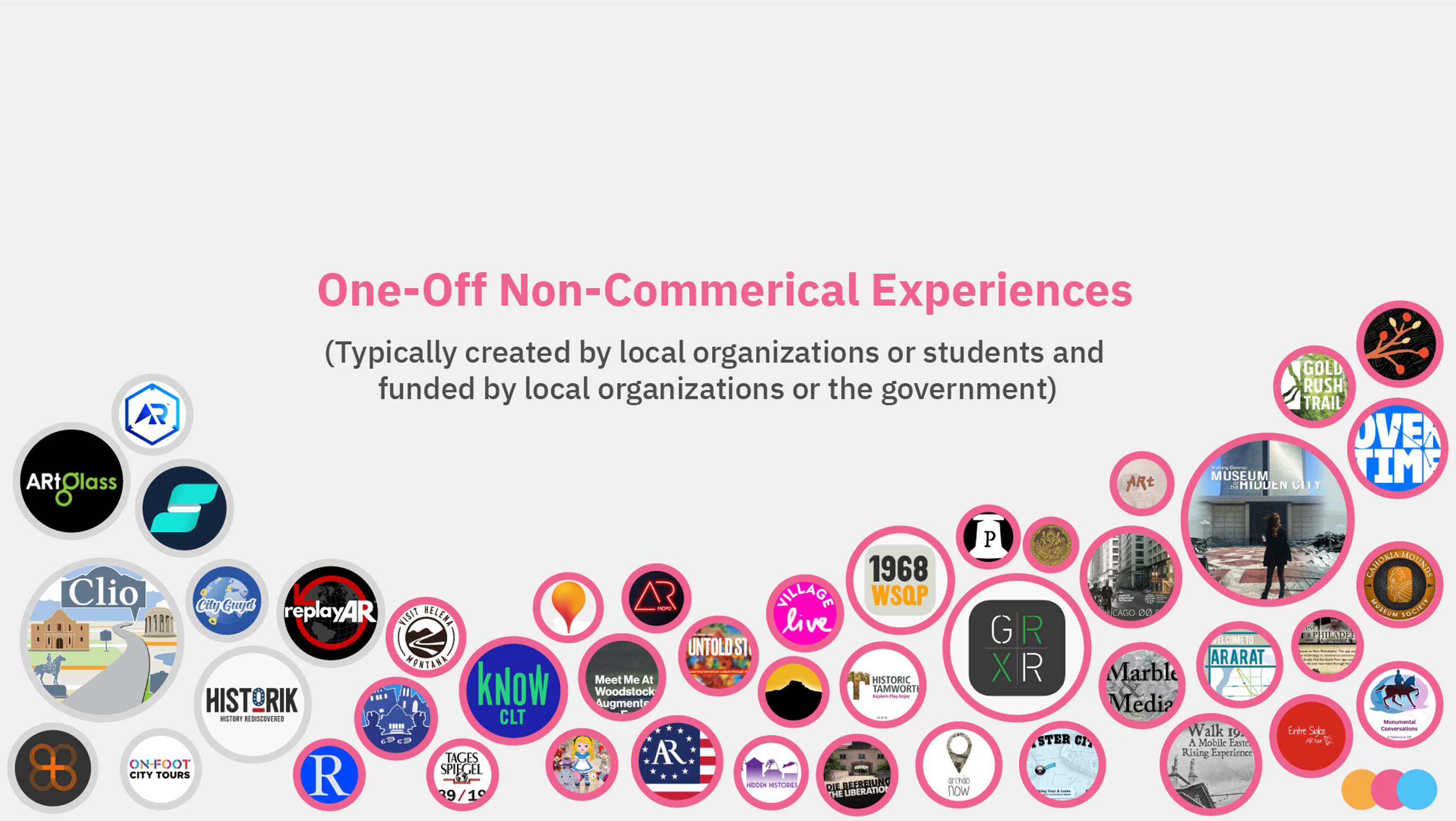

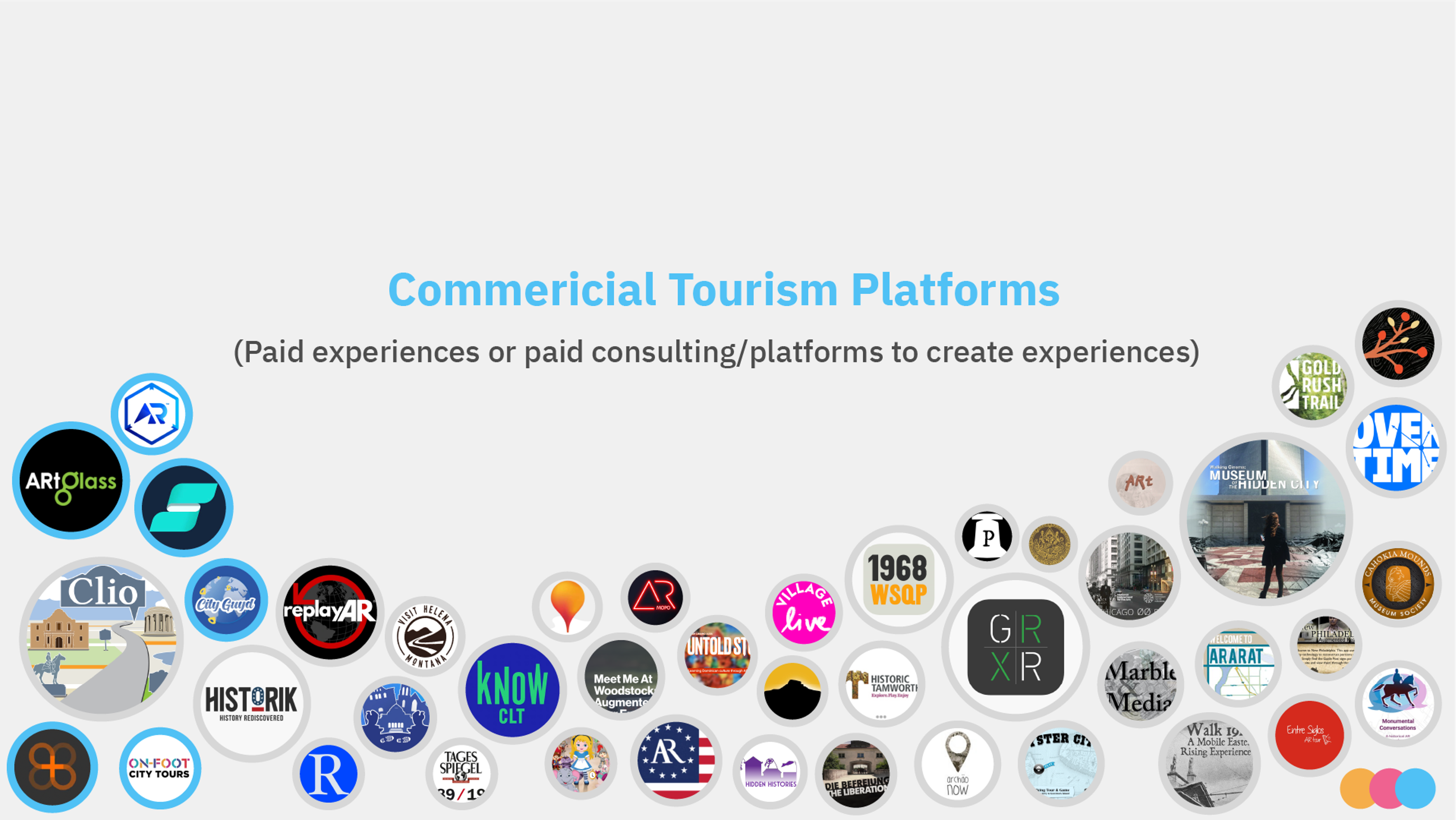

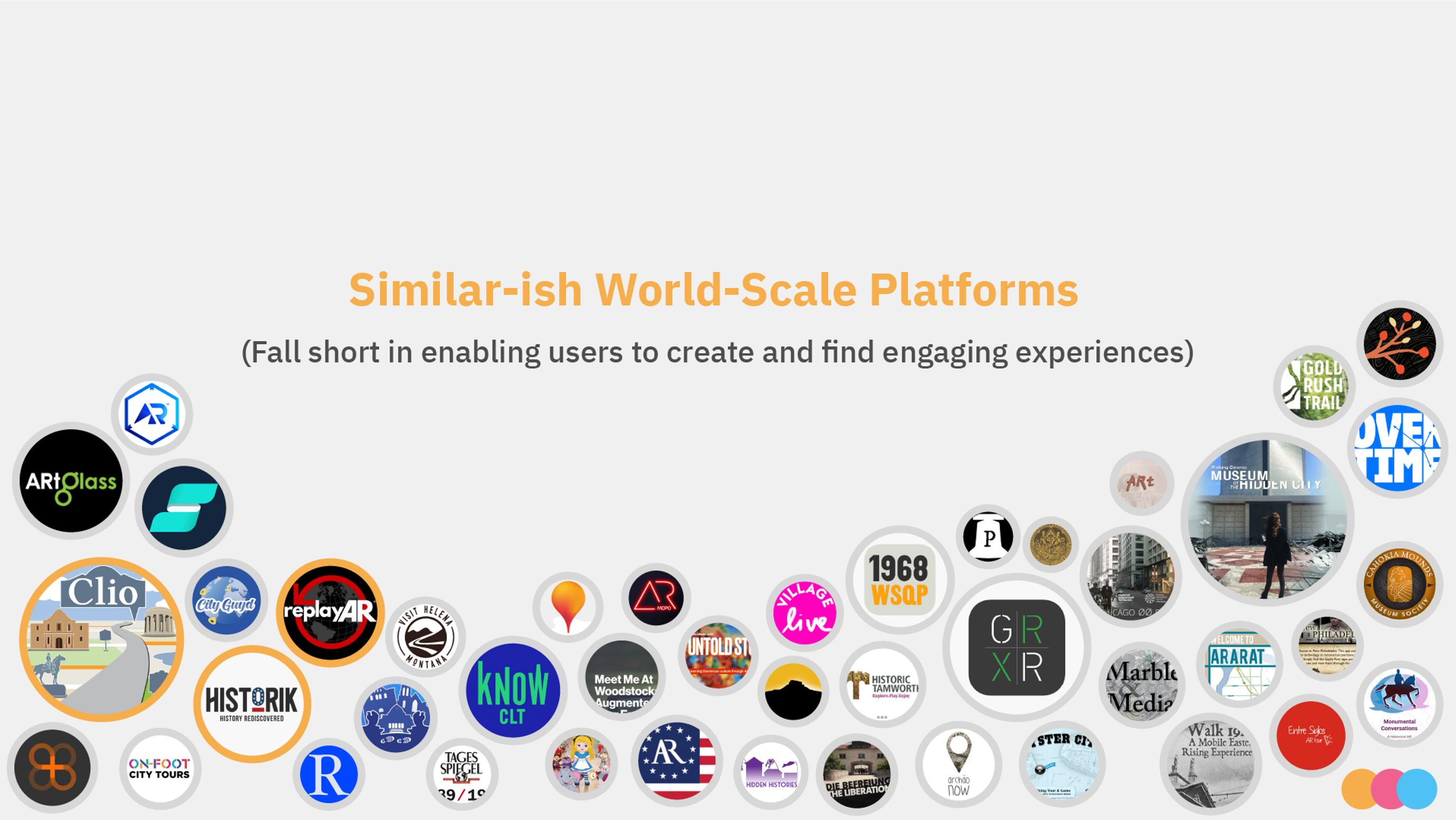

I began the creation process with an analysis of the apps and experiences that currently exist in the world-scale historical AR space. I found that there were generally three types of apps: one-off non-profit experiences, typically covering a small area with a specific topic; commercial tourism platforms, either sold directly to tourists or offered to tourism organizations for consulting fees; and a very limited number of world-scale platforms that are generally in the ballpark of what I was imagining for this app. The three accompanying slides show the rough numerical distribution of these apps.

This analysis led to an array of important findings that shaped the direction of the project moving forward:

- Most of the work in this space has been produced by pioneering institutions using external funding to innovate their teaching methods; publicly available funding documents show that development costs for a single tour can range from five to seven figures.

- Work done by smaller, unfunded organizations tends to lack modern AR feature sets, disregards standard interface design practices, and is often left abandoned once the organization moves on to other projects.

- The world-scale platforms that did exist were lacking in both the ease of finding content and the ability to create it. Most only allowed for the posting of single photos (no models, video, audio, or text), and there were virtually no systems in place for finding experiences near you.

Initial User Interviews

I created a set of questions to ask an assortment of other students, professors, and friends who I thought might be interested in the app. These questions included:

- What applications or mediums do you currently use for creative creation? What do you like about them?

- What experiences have you had sharing or learning about personal or communal histories before?

- How often do you think about the history of the built environment around you?

- Do you have any notable experiences, good or bad, with no-code creation tools?

- Have you used mobile augmented reality before? What was your opinion on it?

Walking Tour Prototype

Once I completed the competitive analysis and user interviews, I embarked on creating my own non-digital prototype of a world-scale experience to understand firsthand the process of walking tour creation and viewing. I consulted a number of historical accounts of the original Dutch settlement of New Amsterdam, from which I selected 17 stops that illustrated routine daily life within the colony. I then followed the route of the tour in person, noting the different emotions and thoughts I had along the way. At each stop, I read some informational text I had written on my phone and tapped around on the screen or moved my phone to simulate interaction. Below is a map of the walking route and stops.

Feature List

Combining what I've learned from competitive analysis and the prototype tour with previous experience in the area, I created a list of all the major features that I planned to integrate into the finished platform (see below). This would serve as the starting point for interface creation.

- Selection screens for walks near you and all walks, in a list view and map view

- AR View of all published places near the user's location

- User customization of walk font and accompanying illustration

- Estimated walk length and time, including elevation changes if possible

- On-screen arrows when entering/leaving stops and enlarging the overhead map between stops to assist with navigation throughout the tour

- Use of the ARGeoAnchor API to allow for the 3D content to integrate itself seamlessly into the built environment, rather than sticking out on top as in most other tours

- Mobile workflow for creating your own walks by assembling pre-made 3D assets, images, audio, text, and video around virtual GeoAnchors ('places'), then arranging the places in order

- Mobile workflow for creating individual places outside of walks, adding them to walks later

- Ability for a user to publish/hide their own tours at any time

- A scale within the interface to show textual support for histories (single text, multiple text, oral history, individual story, fantasy)

- A system for reporting tours or places that violate community guidelines or contain copyrighted materials
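The "estimated walk length and time" feature in the list above could be approximated along the following lines. This is only a minimal sketch, not the app's actual implementation: the `Stop` type, the function names, and the 1.4 m/s walking pace are all assumptions made for illustration, and the distance is measured in straight lines between stops, ignoring street routing and elevation changes.

```swift
import Foundation

/// A stop on a walk, identified only by its coordinates in degrees (hypothetical type).
struct Stop {
    let latitude: Double
    let longitude: Double
}

/// Great-circle distance in meters between two stops, using the haversine formula.
func haversineMeters(_ a: Stop, _ b: Stop) -> Double {
    let earthRadius = 6_371_000.0 // mean Earth radius in meters
    let lat1 = a.latitude * .pi / 180
    let lat2 = b.latitude * .pi / 180
    let dLat = lat2 - lat1
    let dLon = (b.longitude - a.longitude) * .pi / 180
    let h = sin(dLat / 2) * sin(dLat / 2)
        + cos(lat1) * cos(lat2) * sin(dLon / 2) * sin(dLon / 2)
    return 2 * earthRadius * asin(min(1, sqrt(h)))
}

/// Total straight-line length of a walk, summing each consecutive pair of stops.
func walkLengthMeters(_ stops: [Stop]) -> Double {
    guard stops.count > 1 else { return 0 }
    return zip(stops, stops.dropFirst()).reduce(0.0) { total, pair in
        total + haversineMeters(pair.0, pair.1)
    }
}

/// Estimated walking time in whole minutes, rounded up.
/// `metersPerSecond` is an assumed average pace (roughly 5 km/h), not a measured value.
func estimatedMinutes(lengthMeters: Double, metersPerSecond: Double = 1.4) -> Int {
    Int((lengthMeters / metersPerSecond / 60).rounded(.up))
}
```

A real implementation would more likely request walking directions from a routing service so that the estimate follows streets rather than straight lines, but the straight-line sum gives a usable lower bound for display.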

UI Design

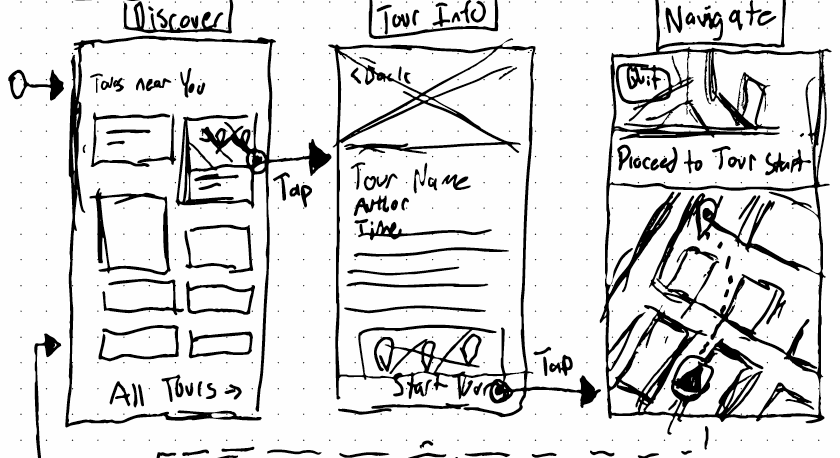

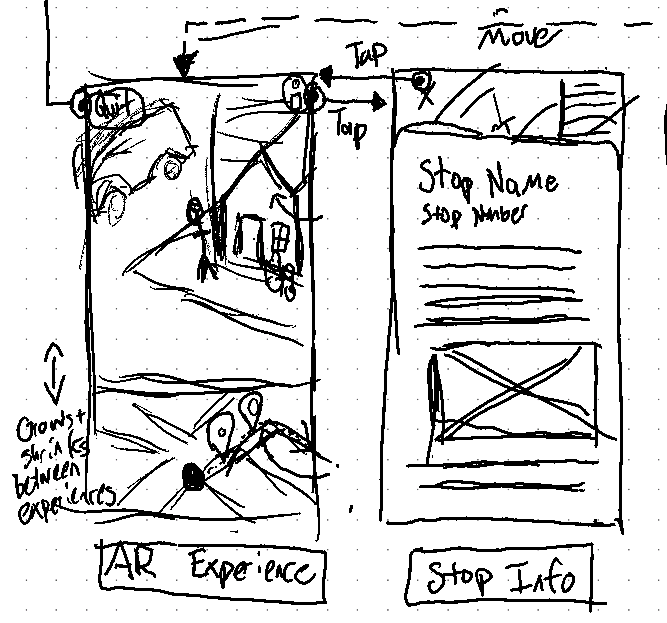

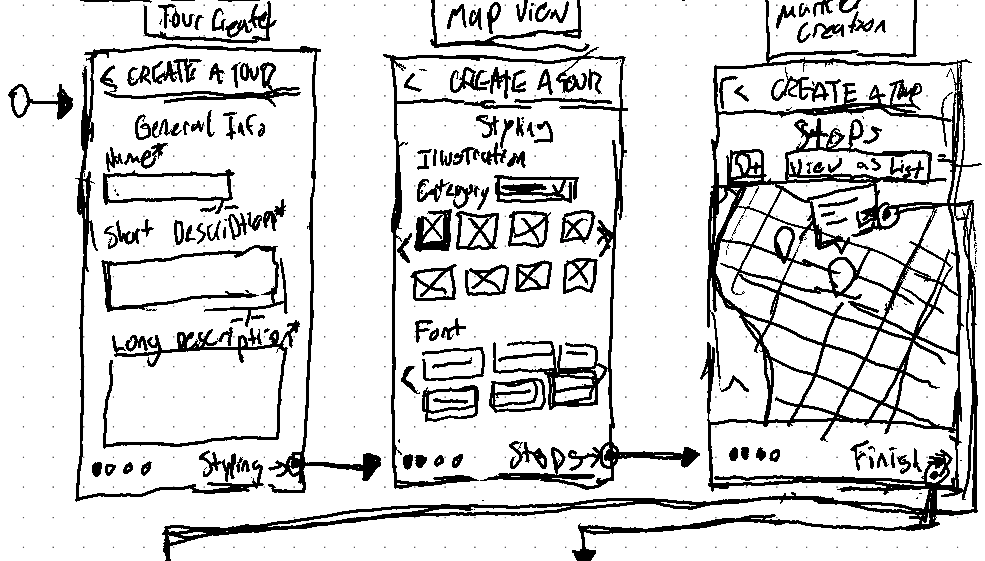

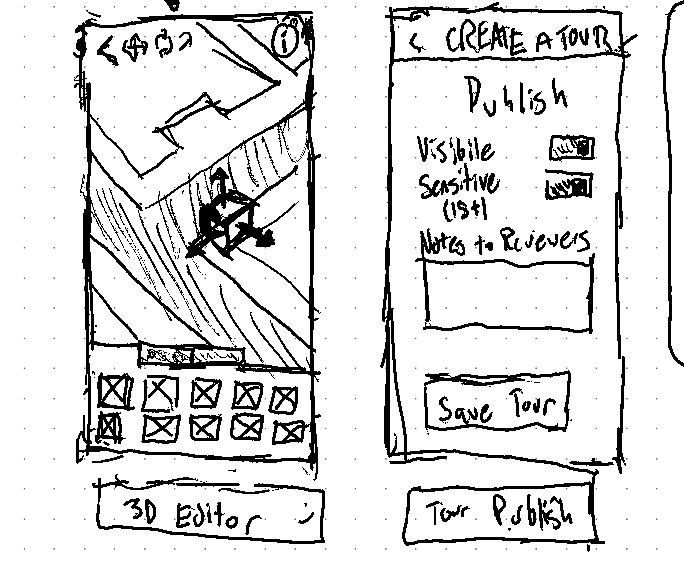

Wireframes and User Flow

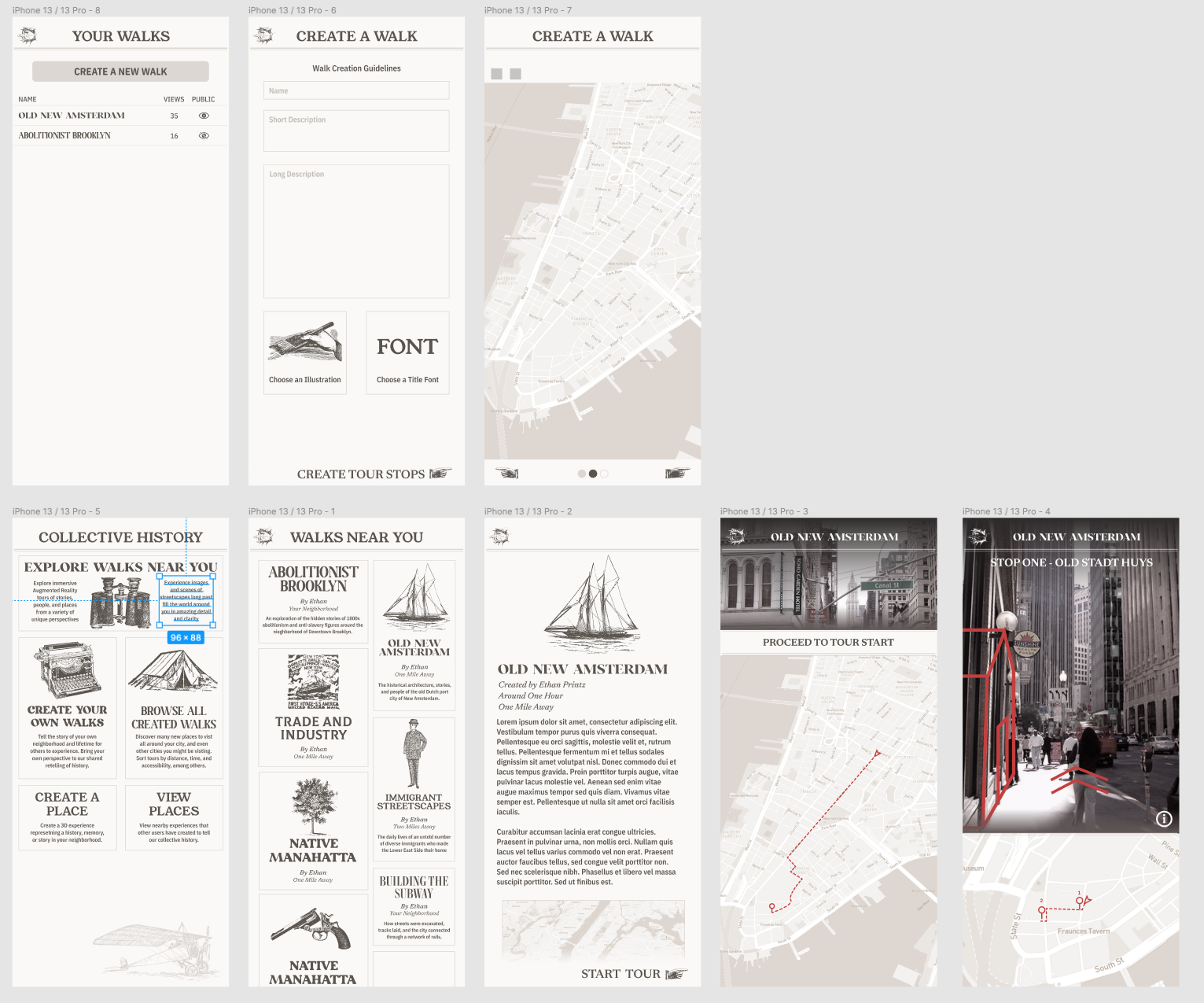

The next step in the creation process was turning the basic feature list and user research into more solidified user journeys and wireframes. These started with rough sketches in my digital notebook, a few of which are shown here. I focused most of my efforts on ideating and iterating on the major pages (AR Experience, Discover, and 3D editor).

Visual Styling

From these sketches I moved to create UI mockups in Figma. I first made the interfaces in a style inspired by mid to late 1800s advertising catalogs (see below), which I found to be a novel aesthetic paradigm that highlighted the historic nature of the experiences. But further user testing on the high-fidelity prototype with my family members over spring break determined (among other things) that they actually preferred to be able to determine their own unique aesthetic styles on a per-walk basis: this would allow them to recreate more modern memories/stories instead of being visually railroaded into the more distant past.

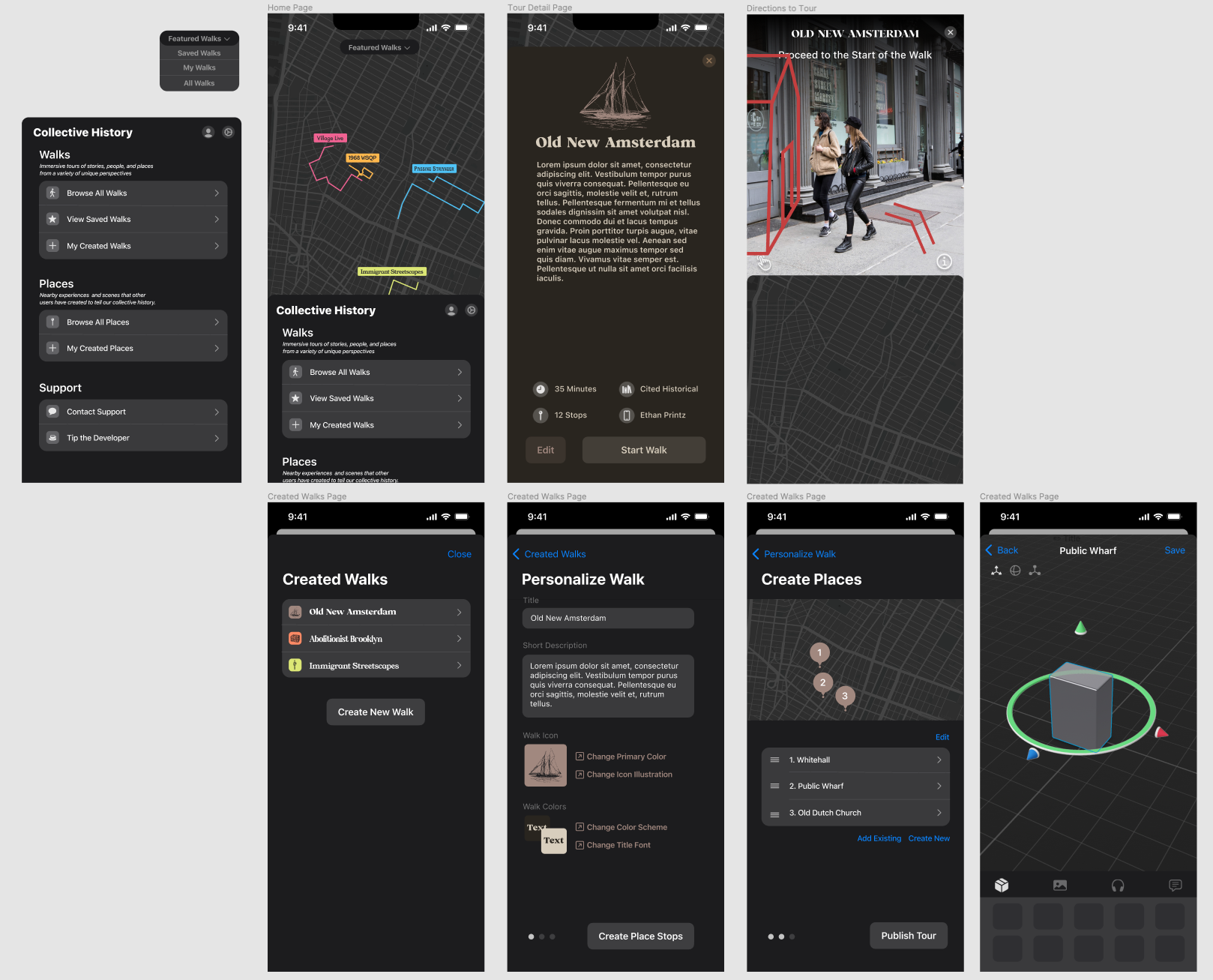

With this feedback in hand, I decided to fully redo all previously designed screens in a more native iOS design style. This ground-up redesign also allowed for a rethinking of previously constructed screens, most notably the home screen. It was redesigned to prioritize the visibility of walks near you above all else, with a map taking up a majority of the home screen and the walks being colorful overlays on an otherwise colorless home screen.

Development

Tech Stack

The platform was developed using native Swift and Xcode's tooling. The majority of the interface was written using SwiftUI, with the maps and AR view written using UIKit. In terms of APIs used throughout the project, MapKit was used for the maps, CloudKit for the server backend, and ARKit/RealityKit for augmented reality. The choice of an all-native Apple stack took both cost and functionality into consideration. As I was a college student with almost no budget, reducing overhead costs was a priority, and leveraging Apple's technologies meant there would be virtually no costs associated with mapping, server, or AR processing. It was also a necessity for supporting the newer ARGeoAnchor API, which allows for hyper-precise localization in world space, as that was not yet supported by ARFoundation or other third-party frameworks.

Screen Order Planning

As the various components of the platform were highly interdependent, each relying on data that would be difficult to generate without a custom interface for it, I ended up developing the screens of the app in the order a user would reach them during the walk creation flow. This allowed each screen to query real server data immediately, since that data had already been generated by the previous step in the process. The order of development was as follows: Home screen bottom sheet > Map > Personalize walk > Place list > Place Editor > Walk List > Walk Detail > Augmented Reality View.

Interesting Code Snippets

While the full codebase is tens of thousands of lines long, there are some particularly interesting snippets that I could highlight to go over the primary features and innovations of the app. First is the placement of the AR GeoAnchors themselves, which for all the complex work being done on the backend is a delightfully simple function:

```swift
// Get data for ARGeoAnchors from server
Task {
    do {
        let placeRecords = walkDetailViewModel.selectedWalk?.places ?? []
        for placeRecord in placeRecords {
            // Get Place from server
            let place = try await CloudKitManager.shared.getIndividualPlace(recordID: placeRecord.recordID)
            // Generate ARGeoAnchor from place
            let geoAnchor: ARGeoAnchor = ARGeoAnchor(
                name: place.name,
                coordinate: CLLocationCoordinate2D(
                    latitude: place.coordinate.coordinate.latitude,
                    longitude: place.coordinate.coordinate.longitude))
            // Append the place and its ARGeoAnchor to the view model arrays
            // (3D objects are attached later, once the anchor is added to the session)
            viewModel.places.append(place)
            viewModel.geoAnchors.append(geoAnchor)
        }
    } catch {
        print("Error retrieving place records")
    }
}
```

...

```swift
// Called upon adding a GeoAnchor to the ARSession
func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
    for geoAnchor in anchors.compactMap({ $0 as? ARGeoAnchor }) {
        // Delay loading in proportion to distance so that the main thread
        // is not blocked by every anchor loading at once.
        DispatchQueue.main.asyncAfter(deadline: .now() + (distanceFromDevice(coordinate: geoAnchor.coordinate, locationManager: locationManager) / 10)) {
            // Match the anchor back to its place by name; skip it if there is no match
            guard let place: CHPlace = self.viewModel.places.first(where: { $0.name == geoAnchor.name }) else { return }
            Task {
                do {
                    let modelObjects = try await CloudKitManager.shared.getModelObjectsByPlace(recordID: place.id)
                    print("Model Objects: \(String(describing: modelObjects))")
                    // Create an entity anchored to the GeoAnchor
                    let geoAnchorEntity = AnchorEntity(anchor: geoAnchor)
                    for obj in modelObjects {
                        let entity = try Entity.loadModel(named: obj.assetName)
                        entity.scale = [obj.assetScale, obj.assetScale, obj.assetScale]
                        geoAnchorEntity.addChild(entity)
                    }
                    // Add the populated anchor entity to the scene
                    self.arView?.scene.addAnchor(geoAnchorEntity)
                } catch {
                    // Report the failure instead of crashing on a missing model or failed fetch
                    print("Error loading model objects: \(error)")
                }
            }
        }
    }
}
```
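The delegate method above delays each anchor's loading by its distance from the device divided by 10. A minimal sketch of how that mapping could be made explicit is shown here; `staggeredDelay` is a hypothetical helper, and the `maxDelay` cap and the guard against invalid distances are assumptions that the original code does not include.

```swift
import Foundation

/// Converts an anchor's distance from the device into a loading delay in seconds.
/// Hypothetical helper: the default of 10 meters per second of delay mirrors the
/// `/ 10` in the snippet above, while `maxDelay` is an assumed upper bound.
func staggeredDelay(distanceMeters: Double,
                    metersPerSecond: Double = 10,
                    maxDelay: TimeInterval = 5) -> TimeInterval {
    // Treat negative or non-finite distances (e.g. no location fix yet) as "load now".
    guard distanceMeters.isFinite, distanceMeters > 0 else { return 0 }
    // Nearby anchors load almost immediately; distant ones wait, but never past the cap.
    return min(distanceMeters / metersPerSecond, maxDelay)
}
```

The result could be passed straight to `DispatchQueue.main.asyncAfter(deadline: .now() + delay)`. Without a cap, an anchor one kilometer away would wait 100 seconds before its models began loading, which may or may not be the intended behavior.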

Also interesting is the home view, which ended up being a series of bottom sheets that are conditionally displayed depending on the app state (whether location services are enabled, whether the user has an account, etc.). This provided a consistent navigational experience that centered around the home map screen as the primary method of finding content near you.

```swift
// MARK: - Interactive Map Background
HomeMapView()
    .onAppear {
        homeViewModel.checkIfHasSeenOnboard()
    }
    // MARK: - Draggable Bottom Sheet
    .bottomSheet(bottomSheetPosition: $homeViewModel.bottomSheetPosition,
                 options: [
                    .dragIndicatorColor(.backgroundSecondary),
                    .background({ AnyView(Color.absoluteGrey) })
                 ],
                 headerContent: { HomeViewBottomSheetHeader() },
                 mainContent: { HomeViewBottomSheetMain() })
    // MARK: - Onboarding Sheet
    .sheet(isPresented: $homeViewModel.isShowingOnboardView) {
        OnboardView()
    }
    // MARK: - Profile Setup Sheet
    .sheet(isPresented: $homeViewModel.isShowingProfileView) {
        ProfileSetupView().environmentObject(homeViewModel)
    }
    // MARK: - Location Authorization Sheet
    .sheet(isPresented: $locationManager.showAuthorizationView) {
        LocationAuthorizationView()
    }
    // MARK: - Primary Navigation Sheet
    .sheet(isPresented: $homeViewModel.isShowing) {
        NavigationView { homeViewModel.sheetContent() }
            .accentColor(Color.accentBlue)
            .environmentObject(locationManager)
    }
    // Roughly equivalent to onAppear for network calls
    .task {
        try? await CloudKitManager.shared.getUserRecord()
        homeViewModel.checkAccountStatus()
        do {
            homeViewModel.walkList = try await CloudKitManager.shared.getAllWalks()
        } catch {
            // This catch covers the walk list request, not the user record request above
            print("CloudKit Manager could not get walks: \(error)")
        }
    }
```
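The home view above drives each sheet from its own Boolean flag. One way to reason about which sheet should appear for a given app state is to model the decision as a single pure function. The sketch below is illustrative only: `HomeSheet`, `HomeState`, and `sheetToPresent(for:)` are hypothetical names, and the priority order (onboarding, then location authorization, then profile setup) is an assumption rather than the app's confirmed behavior.

```swift
/// The sheets the home screen can present (illustrative names).
enum HomeSheet: Equatable {
    case onboarding
    case locationAuthorization
    case profileSetup
}

/// A snapshot of the app state that decides which sheet, if any, is shown.
struct HomeState {
    var hasSeenOnboarding: Bool
    var isLocationAuthorized: Bool
    var hasAccount: Bool
}

/// Returns the highest-priority sheet for the given state, or nil when the
/// user can go straight to the map. The priority order is an assumption.
func sheetToPresent(for state: HomeState) -> HomeSheet? {
    if !state.hasSeenOnboarding { return .onboarding }
    if !state.isLocationAuthorized { return .locationAuthorization }
    if !state.hasAccount { return .profileSetup }
    return nil
}
```

Keeping the decision in one function makes it easy to unit test and guarantees that only one sheet is requested at a time. If `HomeSheet` also conformed to `Identifiable`, a value like this could drive a single `.sheet(item:)` modifier in place of several `.sheet(isPresented:)` modifiers.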

As an example of a more typical page, here is the tour detail view. It contains many of the basic MVVM features found throughout the app and demonstrates how CloudKit integrates into the data querying process (without caching via Core Data, which I'm currently adding in preparation for launching the app).

```swift
import SwiftUI
import CloudKit
import Drops

struct WalkDetailView: View {
    @EnvironmentObject var walkManager: WalkManager
    @StateObject var viewModel = WalkDetailViewModel()
    let walkReferenceID: CKRecord.ID

    var body: some View {
        VStack {
            // Walk icon, title, and description
            ScrollView {
                Image(viewModel.iconName)
                    .resizable()
                    .scaledToFit()
                    .foregroundColor(viewModel.accentColor)
                    .frame(width: 150, height: 150)
                Text(viewModel.title)
                    .font(.custom(viewModel.fontName, size: 28))
                    .lineLimit(2)
                    .minimumScaleFactor(0.75)
                    .foregroundColor(viewModel.foregroundColor)
                    .frame(maxWidth: 320)
                Text(viewModel.longDescription)
                    .font(.body)
                    .padding(.horizontal, 35)
                    .padding(.top, 8)
                    .foregroundColor(viewModel.foregroundColor)
            }
            // Walk properties: duration, stop count, veracity category, author
            HStack {
                VStack {
                    TourDetail(iconSystemName: "clock.circle.fill",
                               propertyText: "\(viewModel.time) Minutes")
                    TourDetail(iconSystemName: "mappin.circle.fill",
                               propertyText: "\(viewModel.selectedWalk?.places?.count ?? 0) Stop\((viewModel.selectedWalk?.places?.count ?? 0) != 1 ? "s" : "")")
                }
                VStack {
                    TourDetail(iconSystemName: "books.vertical.circle.fill",
                               propertyText: "\(viewModel.veracityCategory.rawValue)")
                    TourDetail(iconSystemName: "person.crop.circle.fill",
                               propertyText: "Ethan Printz")
                }
            }
            .padding(.horizontal, 35)
            .padding(.bottom)
            .environmentObject(viewModel)
            // Action buttons: save, edit, start
            HStack {
                Button {
                    // Unwrap safely instead of force-unwrapping the selected walk
                    if let walk = viewModel.selectedWalk {
                        // TODO: Change from dummy state
                        viewModel.isSavedToWalkManager = true
                        walkManager.userSavedWalks.append(walk)
                    }
                } label: {
                    Image(systemName: viewModel.isSavedToWalkManager ? "star.fill" : "star")
                        .resizable()
                        .frame(width: 20, height: 20)
                }
                .buttonStyle(.bordered)
                .tint(viewModel.accentColor)
                .controlSize(.large)
                NavigationLink(
                    destination: WalkEditorView(walkReference: walkReferenceID),
                    label: { Text("Edit").bold() }
                )
                .buttonStyle(.bordered)
                .tint(viewModel.accentColor)
                .controlSize(.large)
                Button {
                    viewModel.isARViewPresented = true
                } label: {
                    Text("Start Walk").bold()
                }
                .buttonStyle(.borderedProminent)
                .tint(viewModel.accentColor)
                .controlSize(.large)
            }
            .padding(.horizontal, 25)
        }
        .frame(maxWidth: .infinity)
        .background(AnyView(viewModel.backgroundColor).edgesIgnoringSafeArea(.all))
        .navigationBarTitle("")
        .navigationBarTitleDisplayMode(.inline)
        // AR View
        .fullScreenCover(isPresented: $viewModel.isARViewPresented, content: ARWalkView.init).environmentObject(viewModel)
        .task {
            // Show the locally held copy of the walk first
            viewModel.walkManager = walkManager
            viewModel.getSelectedWalk(
                walkManager: walkManager,
                walkReferenceID: walkReferenceID
            )
            // Then reload from the server in case there have been changes
            do {
                viewModel.selectedWalk = try await CloudKitManager.shared.getIndividualWalk(recordID: walkReferenceID)
            } catch {
                Drops.show(Drop(title: "Error Getting Walk Info"))
            }
        }
    }
}
```
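The detail view above displays `viewModel.veracityCategory.rawValue`, which corresponds to the scale of textual support described in the feature list (single text, multiple text, oral history, individual story, fantasy). The app's real type is not shown, so the following is only a guess at its shape: the case names, the display strings, and the `init(stored:fallback:)` helper are all assumptions made for illustration.

```swift
/// How a walk's history is sourced, following the scale in the feature list.
/// Raw values are assumed display labels, not the app's confirmed strings.
enum VeracityCategory: String, CaseIterable {
    case singleText = "Single Text"
    case multipleText = "Multiple Text"
    case oralHistory = "Oral History"
    case individualStory = "Individual Story"
    case fantasy = "Fantasy"

    /// Builds a category from a stored string, using a fallback when the value
    /// is missing or unrecognized (the default fallback is an assumption).
    init(stored: String?, fallback: VeracityCategory = .individualStory) {
        self = stored.flatMap(VeracityCategory.init(rawValue:)) ?? fallback
    }
}
```

Backing the enum with `String` raw values keeps it easy to store in a text field on a server record and to show directly in the interface, while `CaseIterable` lets a picker list every option without hard-coding them.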

GIFs of App

Capstone Finalized User Testing

User testing was carried out throughout the design and development process to make sure that I was continually improving the platform to be as user-friendly as possible. Most of this testing took the form of informal interviews and sessions with friends and family, though it was capped off with more formalized and well-documented rounds of user testing at the end of the capstone process with six acquaintances of college age with moderate technical abilities. Naturally this user testing base will need to be diversified considerably to conclusively answer the questions posed, but it nonetheless contributed a useful sample for evaluating this project. To measure the platform as it has been created against the initial research claim at the start of this process, I developed a series of user research questions on which I could survey participants. This testing focused on the walk creation process, as that was the crucial component for answering the question at hand. The creation process was divided into three sections: walk personalization, place editing, and the 3D editor. Each section had its own observation, interrogatory, and numerical testing guidelines.

Walk personalization usability was rated decently by users, with the majority of the feedback received pointing to a lack of desired personalization options. This can be easily remedied by adding more varied personalization options. A selection of the feedback given was as follows:

- Needs more un-professional styles for interface colors

- Walk creation guidelines should be at the top of the page

- Needs easier way to delete text (click to delete all button)

- Want more varied icons to select from

- The link-to-picker-page paradigm works well, intuitive to use

- User considers personalization options adequate (average of six ratings on a 1-10 scale): 5.9

Place editing usability was rated slightly higher on average, though the primary issues shifted from simply lacking content to a couple of deeper interface design problems relating to the exact way that new stops were added to the list of stops. The observations and feedback included:

- Users had considerable difficulty figuring out how to add new places

- Interaction with map increased user confusion

- List reordering and deleting was universally understood

- User considers order of steps intuitive (average of six ratings on a 1-10 scale): 6.5

The 3D editor, while not completely finished at the time of testing, was the most highly rated component tested in this project. It proved to be a unique experience for those testing the project, and was when many people had their 'lightbulb' moment for what they would really want to create on their own time.

- Users naturally try to drag-and-drop, might need to find way to make it work

- Users have intuitive sense for gestural control scheme

- Needs better organization paradigm for models

- Some users were confused by the headphones icon

- User considers the editor easy to use (average of six ratings on a 1-10 scale): 7.33

While not a component of the formal user testing, the reception to the project at the ITP/IMA Spring Show proved to be an affirmation of the importance of such work. Many expressed excitement about the possible uses of the platform as it was designed and created, and requested to be sent a notification when it publicly releases.

Next Steps

While the large majority of major development work has been completed, there are still a few features to iron out relating to user content reporting, content rating, data caching, and general bug fixes, in addition to finishing the implementation of the formal user interview feedback I received. I've been working on the project in my spare time over the summer, and currently expect to release by August.